Scaling Woven’s Hiring Assessments with AI

Woven, a technical interview platform, needed to improve their product’s ability to scale.

We increased the percentage of assessments that could be scored automatically from 64% to 77%. Our work helped Woven scale their product and, in turn, raise their next round of funding.

Opportunity

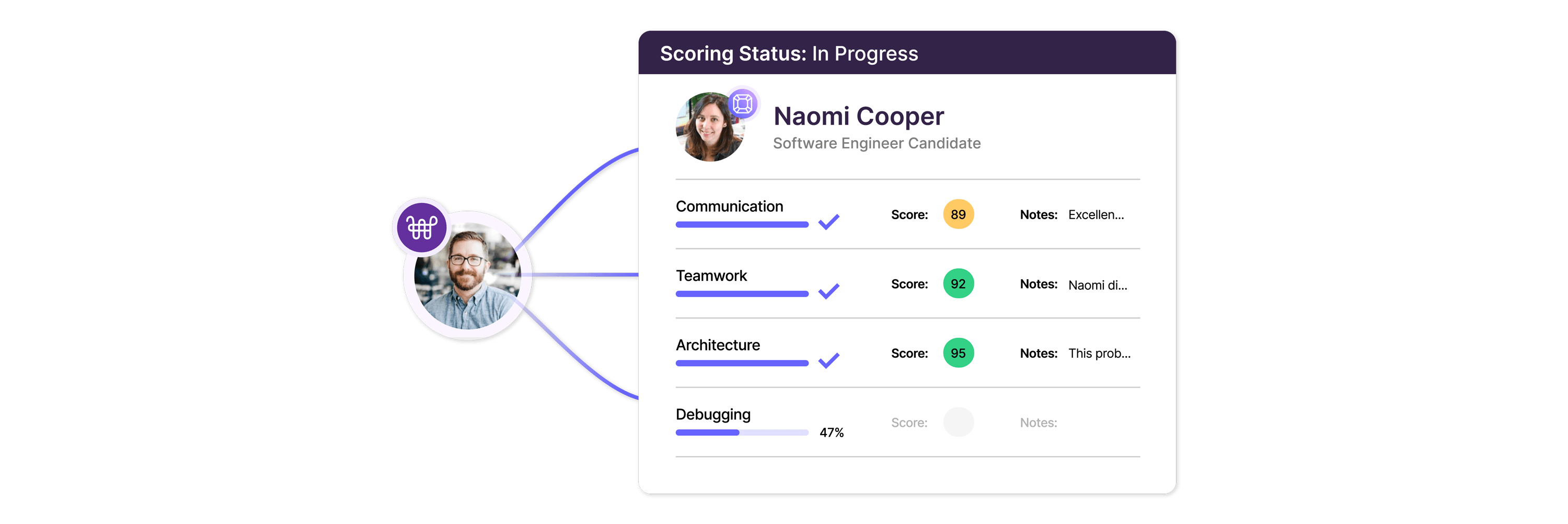

Woven is a technical assessment platform that helps companies hire more effectively by evaluating candidates with job-like skill assessments.

Before raising their next round of funding, Woven needed help making their product scale through automation. They had been incrementally making their assessments scorable by AI rather than by human graders, which lets them process more assessments as they grow.

Solution

To maximize our impact, we identified a key metric we could meaningfully improve: the percentage of skill assessment scenarios that could be automatically scored.

Woven was able to automatically score 64% of assessments before we started.

Woven’s system prompts candidates with a set of scenarios to answer as if they were on the job, such as responding to a support ticket about a bug or writing code to calculate a billing invoice.

Several areas of the product could be improved to move this metric. We focused on groups of similar scenarios that were performing poorly, so that each fix would raise the accuracy of an entire group rather than a single scenario.

We identified several low-accuracy scenarios and analyzed their scores. We used Natural Language Processing (NLP) techniques, including stemming, summarization, padding, and topic extraction, to normalize candidates’ written responses, remove human bias, and put the text into a form better suited to machine processing. We also used Machine Learning (ML) techniques, including coarsening and binning, to improve the accuracy of the ML system.
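As a rough illustration of the normalization and binning described above, the sketch below lowercases and tokenizes a free-text answer, applies a toy suffix-stripping stemmer, and coarsens a fine-grained human grade into a few bins so that graders who differ by a point or two land in the same class. The case study does not say which stemmer or bins were used; the toy stemmer is a crude stand-in for an established one such as Porter's, and the function names, suffix list, and bin edges are all hypothetical.

```python
import re
from bisect import bisect_right

# Hypothetical suffix list for a toy stemmer; a real pipeline would use
# an established stemmer (e.g. Porter) instead.
SUFFIXES = ("ing", "ed", "es", "s", "ly")


def toy_stem(token: str) -> str:
    """Strip the first matching suffix, keeping a stem of at least 3 chars."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def normalize(text: str) -> list[str]:
    """Lowercase, split on non-alphanumerics, and stem each token."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [toy_stem(t) for t in tokens]


# Hypothetical bin edges: coarsen a 0-10 human grade into 3 classes.
BIN_EDGES = [4, 7]  # [0, 4) -> low, [4, 7) -> medium, [7, 10] -> high
BIN_LABELS = ["low", "medium", "high"]


def coarsen(score: float) -> str:
    """Map a fine-grained score onto a coarse label."""
    return BIN_LABELS[bisect_right(BIN_EDGES, score)]


if __name__ == "__main__":
    # ['fix', 'the', 'fail', 'invoic', 'quick']
    print(normalize("Fixed the failing invoices quickly"))
    # ['low', 'medium', 'medium', 'high', 'high']
    print([coarsen(s) for s in (2, 4, 6.5, 7, 10)])
```

The stem `invoic` shows how crude suffix stripping is; the point is only that variants such as "invoice" and "invoices" collapse to one token, and that coarse labels are easier for a model to predict consistently than exact grades.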

One scenario wasn’t scorable by AI because its test questions had recently changed, so data collected for the old questions could not be used to score answers to the new ones. We used automatic code rewriting and transfer learning to make the old data relevant to the updated questions. This spared Woven dozens of hours of manual labor and provided a roadmap for reusing existing data when test questions change.
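The case study does not describe how the code rewriting was done. One plausible approach, sketched below under that assumption, is to parse old submissions with Python’s `ast` module and rename identifiers so that answers written against the old question use the names expected by the updated one. The `RENAMES` mapping, the sample submission, and the helper names are hypothetical, and the transfer-learning step is not shown.

```python
import ast

# Hypothetical mapping from names in the old question to names in the new one.
RENAMES = {"calc_total": "calculate_invoice", "items": "line_items"}


class RenameIdentifiers(ast.NodeTransformer):
    """Rename function definitions, parameters, and variable references."""

    def __init__(self, renames: dict[str, str]) -> None:
        self.renames = renames

    def visit_FunctionDef(self, node: ast.FunctionDef) -> ast.FunctionDef:
        node.name = self.renames.get(node.name, node.name)
        self.generic_visit(node)  # continue into arguments and body
        return node

    def visit_arg(self, node: ast.arg) -> ast.arg:
        node.arg = self.renames.get(node.arg, node.arg)
        self.generic_visit(node)  # also rename names inside annotations
        return node

    def visit_Name(self, node: ast.Name) -> ast.Name:
        node.id = self.renames.get(node.id, node.id)
        return node


def rewrite_submission(source: str, renames: dict[str, str]) -> str:
    """Return the submission's source code with identifiers renamed."""
    tree = RenameIdentifiers(renames).visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))  # Python 3.9+


OLD_SUBMISSION = """
def calc_total(items):
    return sum(price * qty for price, qty in items)
"""

if __name__ == "__main__":
    print(rewrite_submission(OLD_SUBMISSION, RENAMES))
```

Working on the syntax tree rather than with text substitution avoids accidentally renaming matching substrings inside strings, comments, or longer identifiers.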

For several other scenarios, we found problems with the scoring data itself that lowered accuracy. These problems often arose because human graders interpreted the grading rubric differently. In these cases, AI wasn’t the most helpful approach; instead, we wrote regular expressions to improve scoring for these groups of scenarios. We also identified several commonly misunderstood rubric items that could be replaced by unit tests, eliminating the ambiguity.
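To illustrate these two non-ML fixes, the sketch below scores a hypothetical rubric item ("the reply asks the customer for steps to reproduce the bug") with a regular expression, and replaces a hypothetical subjective rubric item ("the invoice total is correct") with unit tests that simply pass or fail. The rubric wording, the pattern, and the `calculate_invoice` function are assumptions for illustration; the case study does not describe Woven’s actual rubrics.

```python
import re
import unittest

# Hypothetical rubric item: does the support reply ask for reproduction steps?
REPRO_PATTERN = re.compile(
    r"\b(steps?\s+to\s+reproduce|reproduc(e|ed|ing)\s+(the\s+)?(bug|issue))\b",
    re.IGNORECASE,
)


def asks_for_repro_steps(response: str) -> bool:
    """Return True if the reply text satisfies the rubric pattern."""
    return REPRO_PATTERN.search(response) is not None


# Hypothetical candidate solution for a billing-invoice scenario.
def calculate_invoice(
    line_items: list[tuple[float, int]], tax_rate: float = 0.0
) -> float:
    subtotal = sum(price * qty for price, qty in line_items)
    return round(subtotal * (1 + tax_rate), 2)


class InvoiceRubricTest(unittest.TestCase):
    """Replaces a subjective 'total is correct' rubric item with checks."""

    def test_subtotal(self) -> None:
        self.assertEqual(calculate_invoice([(10.0, 2), (5.0, 1)]), 25.0)

    def test_tax_applied(self) -> None:
        self.assertEqual(calculate_invoice([(100.0, 1)], tax_rate=0.08), 108.0)

    def test_empty_invoice(self) -> None:
        self.assertEqual(calculate_invoice([]), 0.0)


if __name__ == "__main__":
    print(asks_for_repro_steps("Could you send the steps to reproduce the issue?"))
    # exit=False keeps the interpreter running after the tests finish.
    unittest.main(argv=["invoice_rubric"], exit=False)
```

Both checks are deterministic: two graders (or two runs) always produce the same result, which is exactly what inconsistent human interpretations of a rubric fail to guarantee.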

To wrap up our engagement, we summarized our findings and delivered prototypes Woven could implement. We also provided a plan with next steps Woven can take in the short, medium, and long term, along with cautions about potential pitfalls.

Results

The combination of approaches we took proved successful. During our time together, we:

- Increased the goal metric (assessments scorable by AI) from 64% to 77%

- Improved Woven’s use of ML

- Introduced static code analysis and NLP techniques when they were more accurate than ML

- Identified data cleanliness issues

Our engagement helped Woven scale their product and, in turn, raise their next round of funding.
