I learned about these open source and commercially licensed tools for developers at NeurIPS 2024. Most of the tools on this list are new to me, but not new to the world. You can deploy and use them today!

…pssst – if your manager or your client would rather you make something from scratch instead of starting with an off-the-shelf tool, send them this article.

Agent Frameworks

AutoGen

AutoGen, CrewAI, and LangGraph are all effective agent frameworks. Of the three, AutoGen strikes the best balance between ease of use and ease of configuration. The docs are well organized, and the project’s maintainer is very responsive. I recommend you start with the AgentChat quickstart before you dig into AutoGen Core.

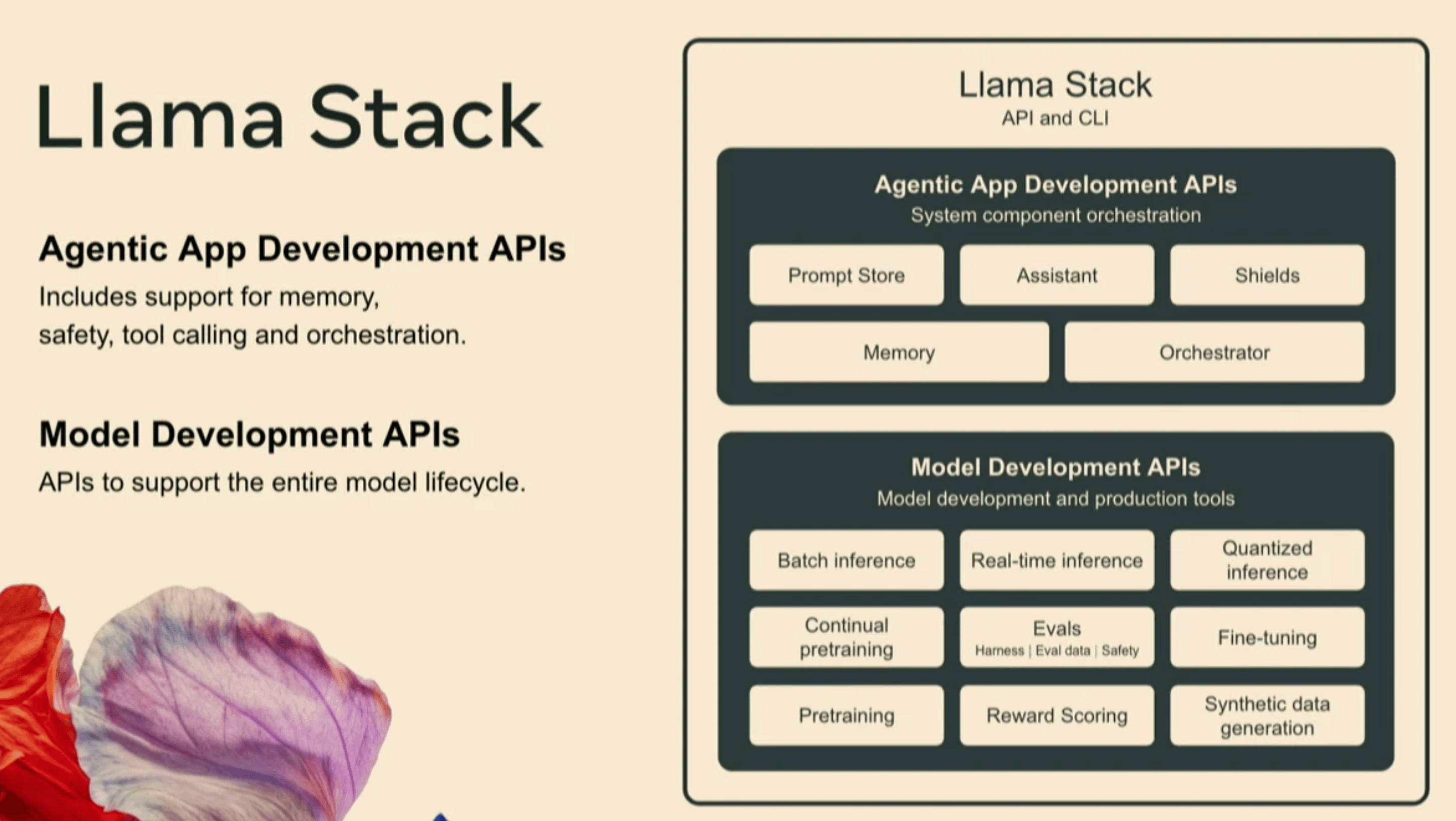

LlamaStack

LlamaStack is a comprehensive framework for developers building applications with AI agents powered by Llama models. What sets it apart is its dual focus: it provides APIs not only for building agent applications but also for developing the underlying models themselves.

The framework’s unique approach to model development is particularly valuable. While LLMs are often treated as interchangeable components, each model family has its own technical peculiarities that developers must handle. LlamaStack’s APIs abstract away Llama-specific challenges (like special token handling and pre-training corpus matching), allowing developers to focus on higher-level tasks like model training and optimization.
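To make "special token handling" concrete, here is a hand-rolled sketch of the chat format used by Llama 3 family instruct models. This is exactly the kind of detail Llama Stack is meant to hide. It is not Llama Stack’s API: the token strings follow Meta’s published Llama 3 prompt format as I understand it, and `format_llama3_chat` is a hypothetical helper.

```python
from typing import Dict, List

# Special tokens from the Llama 3 instruct prompt format (assumed; check the model card).
BOS = "<|begin_of_text|>"
START_HEADER = "<|start_header_id|>"
END_HEADER = "<|end_header_id|>"
EOT = "<|eot_id|>"


def format_llama3_chat(messages: List[Dict[str, str]]) -> str:
    """Render a list of {"role", "content"} dicts into one Llama 3 prompt string.

    Hypothetical helper for illustration; real code should rely on the tokenizer's
    chat template or on a framework such as Llama Stack.
    """
    parts = [BOS]
    for message in messages:
        # Each turn: role header, blank line, content, end-of-turn token.
        parts.append(
            f"{START_HEADER}{message['role']}{END_HEADER}\n\n"
            f"{message['content'].strip()}{EOT}"
        )
    # Leave an open assistant header so the model writes the next turn.
    parts.append(f"{START_HEADER}assistant{END_HEADER}\n\n")
    return "".join(parts)


prompt = format_llama3_chat([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is NeurIPS?"},
])
print(prompt)
```

A missing `<|eot_id|>` or a stray newline here will not raise an error, so this is the sort of mistake a framework-level abstraction saves you from.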

Time Series Forecasting

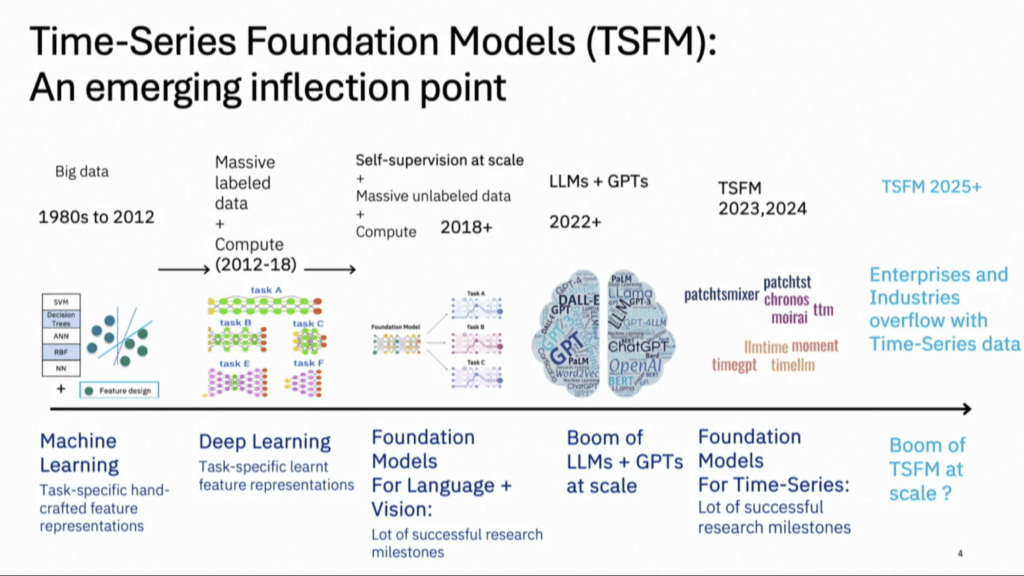

Time Series Foundation Models

Training a model for your time series problem requires a large dataset. This can be problematic if collecting the data is difficult, or if the event you are trying to classify, detect, or predict does not happen all that often. To our collective relief, 2023 and 2024 saw the release of several foundation models for time series problems.

Tiny Time Mixers (TTM)

IBM recently released the Tiny Time Mixers (TTM) family of foundation models, developed by Vijay Ekambaram’s team. Despite having only 1 million parameters, these models outperform much larger billion-parameter models on several time series benchmarks, particularly in few-shot and zero-shot tasks.

Two key advantages of TTM models are:

- Cost- and time-effective fine-tuning: Due to their small size, you can fine-tune them on a CPU in a few minutes

- Built-in interpretability: The models can show which input signals had the greatest influence on their predictions

Keep in mind that while TTM performs well on benchmark datasets, your specific use case may yield different results. The models are available under an open source license that permits commercial use.

The TTM Hugging Face pages note that “The current open-source version supports only minutely and hourly resolutions(Ex. 10 min, 15 min, 1 hour.)”, which suggests that IBM may soon release a new suite of models that supports other resolutions and larger context windows.
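Before trying TTM on your own data, it is worth checking that the sampling interval falls inside the minutely-to-hourly range quoted above. Here is a minimal standard-library sketch. `median_interval` and `check_ttm_resolution` are hypothetical helpers, and reading "minutely and hourly" as "a whole number of minutes, up to one hour" is my assumption, not IBM’s definition.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import List


def median_interval(timestamps: List[datetime]) -> timedelta:
    """Median gap between consecutive timestamps (assumes ascending order).

    The median is robust to a few missing or duplicated points.
    """
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    return median(gaps)


def check_ttm_resolution(timestamps: List[datetime],
                         max_interval: timedelta = timedelta(hours=1)) -> bool:
    """True if the series looks minutely or hourly (assumed interpretation)."""
    interval = median_interval(timestamps)
    whole_minutes = interval % timedelta(minutes=1) == timedelta(0)
    return whole_minutes and timedelta(minutes=1) <= interval <= max_interval


start = datetime(2024, 12, 10)
fifteen_min = [start + timedelta(minutes=15 * i) for i in range(96)]
daily = [start + timedelta(days=i) for i in range(30)]
print(check_ttm_resolution(fifteen_min))  # True
print(check_ttm_resolution(daily))        # False
```

If your data is daily or weekly, this check fails fast, before you spend time on a fine-tuning run.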

Moment

Moment is a family of time series foundation models from CMU, available in three sizes: small (37M parameters), base (113M), and large (346M). Check out their tutorials for forecasting, classification, anomaly detection, imputation, and representation learning on the project’s GitHub and Hugging Face pages.
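Moment’s anomaly detection, as I understand it, scores each time step by how poorly the model reconstructs it. The numpy sketch below shows only that scoring logic. A centered moving average stands in for the pretrained model, purely to keep the example self-contained, and `reconstruct`, `anomaly_scores`, and `flag_anomalies` are hypothetical names, not part of Moment’s package.

```python
import numpy as np


def reconstruct(series: np.ndarray, window: int = 5) -> np.ndarray:
    """Stand-in for a foundation model's reconstruction: a centered moving average.

    Hypothetical placeholder; a real pipeline would call the pretrained model here.
    """
    kernel = np.ones(window) / window
    # mode="same" keeps the output aligned with the input; edges are slightly biased.
    return np.convolve(series, kernel, mode="same")


def anomaly_scores(series: np.ndarray) -> np.ndarray:
    """Squared reconstruction error per time step: larger means more anomalous."""
    return (series - reconstruct(series)) ** 2


def flag_anomalies(series: np.ndarray, quantile: float = 0.99) -> np.ndarray:
    """Indices whose score exceeds the chosen quantile of all scores."""
    scores = anomaly_scores(series)
    threshold = np.quantile(scores, quantile)
    return np.flatnonzero(scores > threshold)


rng = np.random.default_rng(0)
t = np.arange(500)
series = np.sin(2 * np.pi * t / 50) + 0.05 * rng.standard_normal(500)
series[300] += 3.0  # inject a spike
print(flag_anomalies(series))
```

Swapping the moving average for a stronger reconstruction model changes the scores, not the thresholding logic.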

Time-LLM with Neural Forecasting

Time-LLM is not a time series foundation model. It is a layer that transforms time series data into an LLM-friendly format. This approach is more compute-intensive than deploying a dedicated time series model, but if you are unfamiliar with modeling time series problems, it will be easier to use and faster to deploy. You can use it through Nixtla’s NeuralForecast library, a unified interface for over 30 time series models.
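Part of how Time-LLM makes a series "LLM-friendly" is a text prefix describing the input window, which the paper calls Prompt-as-Prefix. The sketch below builds a simplified prefix (min, max, median, trend, and the lags with the highest autocorrelation) with numpy. The prompt wording and helper names are my assumptions rather than the paper’s exact template, and the real model also reprograms the numeric patches themselves, which this sketch does not attempt.

```python
import numpy as np


def top_lags(series: np.ndarray, k: int = 5) -> list:
    """Lags (>= 1) with the highest circular autocorrelation, computed via FFT."""
    x = series - series.mean()
    n = len(x)
    f = np.fft.rfft(x)
    acf = np.fft.irfft(f * np.conj(f), n=n)  # acf[lag] for lag in 0..n-1
    lags = np.argsort(acf[1:])[::-1][:k] + 1  # skip lag 0, highest first
    return [int(lag) for lag in lags]


def build_prompt_prefix(series: np.ndarray, horizon: int, description: str,
                        k: int = 5) -> str:
    """Hypothetical text prefix loosely modeled on Time-LLM's Prompt-as-Prefix."""
    trend = "upward" if series[-1] > series[0] else "downward"
    lags = top_lags(series, k)
    return (
        f"Dataset description: {description} "
        f"Task description: forecast the next {horizon} steps "
        f"given the previous {len(series)} steps. "
        f"Input statistics: min value {series.min():.3f}, max value {series.max():.3f}, "
        f"median value {np.median(series):.3f}, the trend of input is {trend}, "
        f"top {k} lags are {lags}."
    )


t = np.arange(96)
# Daily cycle on hourly data with a slight upward drift.
window = np.sin(2 * np.pi * t / 24) + 0.01 * t
print(build_prompt_prefix(window, horizon=24, description="Hourly sensor readings."))
```

Circular autocorrelation is a cheap way to surface periodicity; it assumes the window covers a few full cycles.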

- Time-LLM Medium tutorial

- Time-LLM arxiv paper

- predictive maintenance with NeuralForecast

- probabilistic forecasting with NeuralForecast

If you want to learn more about modeling uncertainty with probabilistic forecasting, check out these talks from SEPeers Rob Herbig and Chris Shinkle.

LLM4TS

LLM4TS is not a usable library yet. It is a framework that purports to do what Time-LLM does, but at a lower level in the model. Plus, Sagar Samtani, one of the authors, is an IU student, which instills a Hoosierly pride in my corn-fed heart.

Vision

nerfstudio

Neural Radiance Fields (NeRF) are neural networks that learn a 3D scene from a collection of 2D images and can render it from new viewpoints. Many variations have improved upon the original paper. Berkeley’s KAIR lab created nerfstudio to “modularize the various NeRF techniques as much as possible”. You can use nerfstudio to create a NeRF without deep knowledge of any given NeRF technique, provided you have a smartphone and enough daylight to take 100-150 images of a 3D scene.
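Most NeRF variants share one volume-rendering step: sample points along a camera ray, ask the network for a density σ and a color c at each point, and alpha-composite them into a single pixel. The numpy sketch below implements that compositing step as formulated in the original NeRF paper. The densities and colors are made-up inputs standing in for a trained network, and `render_ray` is a hypothetical name, not nerfstudio’s API.

```python
import numpy as np


def render_ray(sigmas: np.ndarray, colors: np.ndarray, t_vals: np.ndarray) -> np.ndarray:
    """Composite per-sample densities (N,) and RGB colors (N, 3) along one ray.

    t_vals (N,) are sample distances along the ray, in increasing order.
    """
    # Distance between adjacent samples; the last interval is effectively infinite.
    deltas = np.append(np.diff(t_vals), 1e10)
    # Opacity of each segment: alpha_i = 1 - exp(-sigma_i * delta_i).
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): light that survives to sample i.
    transmittance = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = transmittance * alphas
    return (weights[:, None] * colors).sum(axis=0)


t_vals = np.linspace(2.0, 6.0, 64)
red = np.tile([1.0, 0.0, 0.0], (64, 1))
# Empty space up to t = 4, then a dense red surface.
sigmas = np.where(t_vals > 4.0, 50.0, 0.0)
print(render_ray(sigmas, red, t_vals))
```

Training a NeRF amounts to adjusting the network so that pixels rendered this way match the photos you took.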

nerfstudio’s installation instructions suggest that macOS is not supported, but this GitHub issue demonstrates that nerfstudio will run on a Mac with Apple silicon.

OpenVLA

Have you ever wanted to build a robot that can take actions given visual and text input?

Model Evaluation

Prometheus

Kim’s lab at CMU created a framework for evaluating LLMs called Prometheus. They also released Prometheus 2, a Mistral fine-tune for model evaluation.
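Prometheus-style evaluator models are prompted with an instruction, a response, and a scoring rubric, and they reply with written feedback followed by a score. The sketch below assumes the reply ends with `[RESULT] <score>` on a 1 to 5 scale, which is how I understand the Prometheus prompt templates; check the model card before relying on it. `parse_judgement` is a hypothetical helper.

```python
import re
from typing import Optional, Tuple

# Assumed output format: "Feedback: <text> [RESULT] <integer 1-5>"
RESULT_PATTERN = re.compile(r"\[RESULT\]\s*\(?([1-5])\b\)?")


def parse_judgement(reply: str) -> Tuple[str, Optional[int]]:
    """Split an evaluator reply into (feedback, score); score is None if unparseable."""
    matches = list(RESULT_PATTERN.finditer(reply))
    if not matches:
        return reply.strip(), None
    last = matches[-1]  # use the final marker in case the feedback quotes one
    feedback = reply[: last.start()].strip()
    if feedback.startswith("Feedback:"):
        feedback = feedback[len("Feedback:"):].strip()
    return feedback, int(last.group(1))


print(parse_judgement("Feedback: The response is accurate but omits a citation. [RESULT] 4"))
```

Returning `None` instead of guessing keeps malformed judgements out of your aggregate metrics.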

EvalAssist

My homespun agent evaluation pipeline could be described as a panel of LLM judges. It is, in reality, a mess of containerized agents and Bash scripts. If only to reduce my blood pressure, I would like to give EvalAssist a try.
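For anyone wiring up a panel of LLM judges by hand, the aggregation step can stay small. This sketch assumes each judge returns an integer score, or `None` when its reply could not be parsed, and combines them with a plurality vote that falls back to the median on ties. The judge names and the tie-breaking rule are my assumptions, not EvalAssist’s behavior.

```python
from collections import Counter
from statistics import median
from typing import Dict, Optional


def aggregate_panel(scores: Dict[str, Optional[int]]) -> Optional[float]:
    """Combine per-judge scores: plurality vote, else median; None if no valid scores."""
    valid = [s for s in scores.values() if s is not None]
    if not valid:
        return None
    counts = Counter(valid).most_common()
    top_score, top_count = counts[0]
    # Accept the most common score only if no other score ties its count.
    if len(counts) == 1 or counts[1][1] < top_count:
        return float(top_score)
    return float(median(valid))


print(aggregate_panel({"judge_a": 4, "judge_b": 4, "judge_c": 2}))     # 4.0
print(aggregate_panel({"judge_a": 5, "judge_b": 3, "judge_c": None}))  # 4.0
```

Logging how often the median fallback fires is a cheap signal that your judges disagree.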

RewardBench

Evaluate the capabilities and safety of your reward model with Allen AI’s RewardBench.
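RewardBench’s core measurement, as I understand it, is pairwise: each prompt comes with a preferred ("chosen") and a dispreferred ("rejected") completion, and the reward model gets credit when it scores the chosen one higher. The sketch below computes that accuracy for any scoring function. `toy_reward` is a deliberately naive stand-in that prefers longer answers, the pairs are invented for illustration, and counting ties as failures is my choice.

```python
from typing import Callable, Iterable, Tuple

# (prompt, chosen, rejected) triples
Pair = Tuple[str, str, str]


def pairwise_accuracy(pairs: Iterable[Pair], reward: Callable[[str, str], float]) -> float:
    """Fraction of pairs where reward(prompt, chosen) > reward(prompt, rejected)."""
    results = [reward(p, chosen) > reward(p, rejected) for p, chosen, rejected in pairs]
    if not results:
        raise ValueError("no pairs to evaluate")
    return sum(results) / len(results)


def toy_reward(prompt: str, completion: str) -> float:
    """Hypothetical stand-in reward model: longer completions score higher."""
    return float(len(completion))


pairs = [
    ("What is 2 + 2?", "2 + 2 equals 4.", "5"),
    ("Name a prime number.", "7", "Nine is a prime number."),
]
print(pairwise_accuracy(pairs, toy_reward))  # 0.5
```

The length-biased toy model passing one pair and failing the other is a small illustration of why benchmarks like this include pairs where the shorter answer is correct.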

Training Process

Label Studio

When I asked researchers about the tools they use to label data, they recommended Label Studio. Here’s a blurb from their GitHub page:

Label Studio is an open source data labeling tool. It lets you label data types like audio, text, images, videos, and time series with a simple and straightforward UI and export to various model formats. It can be used to prepare raw data or improve existing training data to get more accurate ML models.
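Once labeling is done, the JSON export usually needs some reshaping before training. The sketch below assumes a text-classification project whose labeling config uses a `Choices` control, and assumes the export nests each label under `annotations[].result[].value.choices`, which matches the Label Studio JSON exports I have seen; verify against your own export. `export_to_pairs` is a hypothetical helper.

```python
import json
from typing import List, Tuple


def export_to_pairs(export_json: str, text_key: str = "text") -> List[Tuple[str, str]]:
    """Turn a Label Studio JSON export into (text, label) pairs.

    Uses the first choice from the first annotation of each task and skips tasks
    with no annotation or whose result contains no choice.
    """
    pairs = []
    for task in json.loads(export_json):
        annotations = task.get("annotations") or []
        if not annotations:
            continue
        for item in annotations[0].get("result", []):
            choices = item.get("value", {}).get("choices")
            if choices:
                pairs.append((task["data"][text_key], choices[0]))
                break  # one label per task
    return pairs


sample = json.dumps([
    {"id": 1, "data": {"text": "Great talk!"},
     "annotations": [{"result": [{"from_name": "sentiment", "to_name": "text",
                                  "type": "choices", "value": {"choices": ["Positive"]}}]}]},
    {"id": 2, "data": {"text": "Unlabeled task"}, "annotations": []},
])
print(export_to_pairs(sample))  # [('Great talk!', 'Positive')]
```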

EVA

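Full fine-tuning is silly. Parameter-efficient fine-tuning (PEFT) trains only a small number of parameters, either a subset of the model’s weights or a small set of new ones, while the rest stay frozen. Low-Rank Adaptation (LoRA), a popular PEFT method, freezes the pretrained weights and trains a pair of small low-rank matrices whose product is added to each adapted weight matrix. But how do we initialize the weights of an adapter, you ask? With EVA (Explained Variance Adaptation), which initializes them from a singular value decomposition of activations collected on your fine-tuning data. You can even use EVA with DoRA.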
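Here is a minimal numpy sketch of LoRA with an EVA-style initialization. The LoRA layer keeps the pretrained weight `W` frozen and adds a scaled low-rank update `B @ A`; `B` starts at zero, so the adapted layer initially behaves exactly like the original. For `A`, the sketch follows EVA’s core idea as I understand it: use the top right-singular vectors of a batch of the layer’s input activations. The published method also works incrementally over minibatches and redistributes ranks across layers based on explained variance; this sketch does neither, and all names are hypothetical.

```python
import numpy as np


def eva_style_init(activations: np.ndarray, rank: int) -> np.ndarray:
    """Initialize LoRA's A (rank x d_in) from the top right-singular vectors of the inputs.

    activations: (n_samples, d_in) inputs collected from the layer on real data.
    Simplified, single-batch take on EVA's idea; not the published implementation.
    """
    _, _, vt = np.linalg.svd(activations, full_matrices=False)
    return vt[:rank]


class LoRALinear:
    """y = x W^T + (alpha / rank) * x A^T B^T, with W frozen and only A, B trainable."""

    def __init__(self, weight: np.ndarray, a_init: np.ndarray, alpha: float = 16.0):
        self.weight = weight                              # (d_out, d_in), frozen
        self.rank = a_init.shape[0]
        self.A = a_init.copy()                            # (rank, d_in), trainable
        self.B = np.zeros((weight.shape[0], self.rank))   # (d_out, rank), zero init
        self.scale = alpha / self.rank

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T


rng = np.random.default_rng(0)
d_in, d_out, rank = 64, 32, 4
W = rng.standard_normal((d_out, d_in))
acts = rng.standard_normal((256, d_in))
layer = LoRALinear(W, eva_style_init(acts, rank))
print(np.allclose(layer(acts), acts @ W.T))  # True: B = 0, so the adapter starts as a no-op
```

For this 32-by-64 weight at rank 4, the adapter trains 4 × (64 + 32) = 384 values instead of 2,048.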
