Demystifying Machine Learning (ML) and Artificial Intelligence (AI) for Business Leaders

Since OpenAI released ChatGPT in November 2022, AI has become the coolest (or at least, most used) acronym of the day. As a business leader, one might wonder what the terms being thrown around mean and how to separate truth from hype. This post explains machine learning and AI terminology in simple language, to give a bird’s-eye view of the landscape.

How is Machine Learning related to AI?

Systems such as human behavior, language, or unstructured information have been unpredictable and difficult to emulate – until now. The human brain has long been able to cope with complexity and figure out patterns to navigate life. It has been the dream and pursuit of scientists to mimic human intelligence and create machines that can think and act as humans. That is the focus of AI.

Machine learning, a subset of AI, uses data and computing power to develop models that represent patterns and relationships in the data. The process by which the computer learns to identify these patterns is called model training, and the different techniques used for it are outlined in a later section. A trained model can produce useful information from new data without being explicitly programmed for the task. That can mean identifying classes of objects from their pictures, making predictions from past data, separating important information from fluff, or generating new data (as seen in the current generative AI trend).

There are other AI techniques that do not rely on machine learning, such as search algorithms, rules engines, rule-based natural language processing, expert systems that emulate human abilities in specific areas, game-playing algorithms, and so on.

Why is AI moving so quickly now?

Machine learning and AI have been around for a very long time. As early as 1950, Alan Turing discussed the problem of building a machine that could think. In the beginning, the type of computing needed was either very expensive, or largely unavailable. When GPUs came into existence with the gaming industry, their use for machine learning accelerated research and innovation in the field. GPUs allow for parallel processing of the iterative computations needed in training models for machine learning. GPUs and access to large amounts of data led to the AI revolution we see now.

The introduction of the transformer architecture in 2017 had a similar effect on natural language processing, leading to the current Large Language Model (LLM) explosion.

What is Machine Learning?

The core of any machine learning technique is the training data. For example, if we would like our model to determine if the image we present it is that of a cat, we would first collect a set of images, some with cats and some without. We would then label the images as cats and not cats, and use that data to train the model. If the trained model predicts 95 out of 100 newly presented images correctly, we can say that it has a 95% prediction accuracy.
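The accuracy figure above is simple arithmetic, and a short sketch makes it concrete. The labels and predictions below are made up for illustration (95 correct answers out of 100), and the `accuracy` function is a hypothetical helper, not part of any particular library.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical results for 100 newly presented images.
true_labels = ["cat"] * 50 + ["not cat"] * 50
# The model gets 48 of the cats and 47 of the non-cats right: 95 correct in total.
predicted = ["cat"] * 48 + ["not cat"] * 2 + ["not cat"] * 47 + ["cat"] * 3

print(f"accuracy = {accuracy(predicted, true_labels):.0%}")  # accuracy = 95%
```

Note that accuracy is measured on images the model did not see during training, which is what makes it a fair estimate of how the model will behave on new data.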

For the more mathematically inclined, machine learning can be thought of as trying to fit an equation with a large number of variables (also known as features) to a set of training data. Fitting involves determining the coefficients of the equation such that it can later make predictions on new data. Most of the time, the process used to determine the coefficients is iterative. The iterations terminate when the error between model predictions and training data falls below specified limits.
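As a minimal sketch of this idea, the example below fits the simplest possible equation, y = w * x + b, to a handful of made-up points using gradient descent, one common iterative method. The function name `fit_line` and the parameters `learning_rate`, `tolerance`, and `max_iterations` are assumptions chosen for illustration; real models have far more coefficients but follow the same loop.

```python
def fit_line(xs, ys, learning_rate=0.05, tolerance=1e-6, max_iterations=10_000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # coefficients start at an arbitrary guess
    n = len(xs)
    for iteration in range(max_iterations):
        # Error between the model's predictions and the training data.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        mse = sum(e * e for e in errors) / n
        if mse < tolerance:  # stop once the error is below the allowed limit
            break
        # Nudge each coefficient in the direction that reduces the error.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, mse, iteration


# Made-up training data that follows y = 2x + 1 exactly.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b, mse, iterations = fit_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}, error = {mse:.2e}, iterations = {iterations}")
print(f"prediction for x = 10: {w * 10 + b:.2f}")
```

The `tolerance` parameter plays the role of the specified error limit: a looser tolerance ends training sooner but leaves the coefficients less precise.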

Unlike conventional computer programming, which gives computers specific instructions to execute and is deterministic, machine learning is probabilistic. Results in ML depend as much on the quality of the training data as on the training mechanism or model, and on the governance over the model (the allowed error).

Classification of Machine Learning algorithms (models)

Broadly, machine learning algorithms can be grouped into:

Supervised learning: where pre-labeled data, as in the cat image example above, helps the machine learn to determine what class a particular data item belongs to, or to predict new values. This method can be used in classification (e.g., marking emails as spam), prediction (e.g., determining the price of a house given its location and other features), and forecasting (determining trends based on past data).

Unsupervised learning: where the machine independently determines patterns from data without prior labels, grouping values into clusters of similar features. This method can be used in anomaly/fraud detection, recommendation engines, customer clustering based on purchasing behaviors, categorizing news articles, and so on.

Semi-supervised learning: where a small amount of labeled data is combined with a large amount of unlabeled data to train the model. Learning from the labeled dataset is used to label the unlabeled set. This method aims to uncover the underlying natural structure in the data. It is very useful in situations where it is tedious to label data, such as in protein sequence classification, or fraud detection.

Reinforcement learning: where the machine learns by trial and error while interacting with its environment and observing the response, to operate in a manner that optimizes some reward. For example, large language models can use reinforcement learning for fine-tuning, to generate more ethical or context-specific content.
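To make the difference between the first two groups concrete, the sketch below runs a supervised and an unsupervised method on the same made-up customer data (monthly spend). The supervised method is a nearest-centroid classifier and the unsupervised one is a one-dimensional k-means clustering; both are deliberately simple stand-ins, and all function names and figures are assumptions for illustration.

```python
def train_nearest_centroid(values, labels):
    """Supervised: use the labels to compute one average value per class."""
    sums, counts = {}, {}
    for v, label in zip(values, labels):
        sums[label] = sums.get(label, 0.0) + v
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, value):
    """Assign the class whose average is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def k_means_1d(values, k=2, iterations=20):
    """Unsupervised: group values into k >= 2 clusters without any labels."""
    ordered = sorted(values)
    # Simple deterministic start: spread the initial centers across the data.
    centers = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(centers[i] - v))
            clusters[nearest].append(v)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers, clusters


# Made-up monthly spend per customer.
spend = [12, 15, 18, 20, 95, 100, 110, 120]

# Supervised: someone has already labeled each customer.
labels = ["occasional"] * 4 + ["frequent"] * 4
centroids = train_nearest_centroid(spend, labels)
print(predict(centroids, 30))  # occasional

# Unsupervised: no labels, the algorithm finds the two groups on its own.
centers, clusters = k_means_1d(spend, k=2)
print(centers)   # [16.25, 106.25]
print(clusters)  # [[12, 15, 18, 20], [95, 100, 110, 120]]
```

Both approaches find the same two groups here, but only the supervised model knows what to call them. The unsupervised result still needs a person to interpret what each cluster means.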

A different classification of Machine Learning models

With the current AI trend, it also helps to look at machine learning models through a different lens:

A Discriminative model learns to classify (associate labels with) data by determining the boundaries between classes of data points. It does not model how the data points themselves are distributed. It predicts a label given the data. Discriminative models are usually used for supervised machine learning.

A Generative model, on the other hand, learns about the intrinsic structure of the data (labels and features). This enables it to generate new data with similar features. In short, it is able to generate data given a label. Generative models are usually used for unsupervised and semi-supervised learning.
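The contrast can be sketched on a toy dataset of animal weights. The discriminative model below only learns a threshold that separates the two labels, while the generative model learns an average and spread per label and can then produce new, plausible weights for a given label. The data, the function names, and the choice of a normal distribution are all assumptions made for illustration.

```python
import random
import statistics


def fit_threshold(values, labels, positive):
    """Discriminative: find the boundary that best separates the two labels."""
    candidates = sorted(values)
    best_threshold, best_correct = None, -1
    # Try the midpoint between each pair of neighboring values as a boundary.
    for low, high in zip(candidates, candidates[1:]):
        threshold = (low + high) / 2
        correct = sum((v > threshold) == (label == positive)
                      for v, label in zip(values, labels))
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold


def fit_gaussians(values, labels):
    """Generative: model how the values of each label are distributed."""
    by_label = {}
    for v, label in zip(values, labels):
        by_label.setdefault(label, []).append(v)
    return {label: (statistics.mean(vs), statistics.stdev(vs))
            for label, vs in by_label.items()}


def generate(model, label, rng):
    """Draw a new value for the given label from the learned distribution."""
    mean, std = model[label]
    return rng.gauss(mean, std)


# Made-up weights in kilograms.
weights = [3.5, 4.0, 4.5, 5.0, 18.0, 22.0, 25.0, 30.0]
animals = ["cat"] * 4 + ["dog"] * 4

threshold = fit_threshold(weights, animals, positive="dog")
print(f"label given data: 6.0 kg is a {'dog' if 6.0 > threshold else 'cat'}")

model = fit_gaussians(weights, animals)
rng = random.Random(0)  # fixed seed so the run is repeatable
print(f"data given label: a generated cat weighs {generate(model, 'cat', rng):.1f} kg")
```

The threshold can answer "cat or dog?" but cannot invent a new animal, whereas the per-label distributions can do both, at the cost of having to learn more about the data.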

A couple of well-known ML algorithms

It helps to understand a couple of often-mentioned ML techniques that power modern AI. They are:

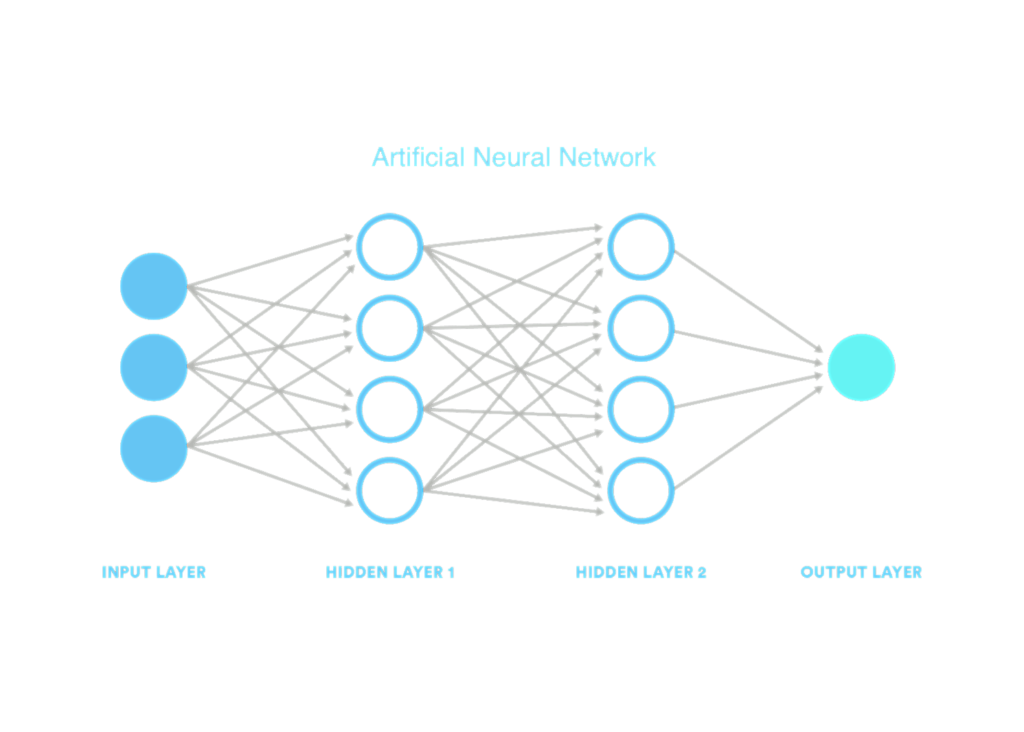

Neural Network: This is a technique that has revolutionized ML, using nodes to mimic the neurons in a human brain. In its simplest form, it has three layers of nodes: an input layer, a hidden layer, and an output layer. Data is fed into the input layer, and transformed using weights and activation functions before moving to the next layer. The output layer computes the desired output of the algorithm. For example, computer vision can use a convolutional neural network to recognize objects in images.

Deep learning: This is a technique using neural networks with several hidden layers (hence deep). The additional layers let it model more complex patterns than a simple network can, and it powers most modern AI applications.
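The layered computation described above can be sketched in a few lines. The network below has two inputs, one hidden layer of three nodes, and one output node. The weights are made-up numbers (in practice they are learned during training), and the sigmoid activation is just one common choice.

```python
import math


def sigmoid(x):
    """Activation function that squashes any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each node takes a weighted sum of the inputs, then activates."""
    return [sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
            for node_weights, b in zip(weights, biases)]


def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Pass data through the hidden layer and then the output layer."""
    hidden = layer(inputs, hidden_w, hidden_b)
    return layer(hidden, output_w, output_b)


# Made-up weights: one row of weights per node in the layer.
hidden_w = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]  # 3 hidden nodes, 2 inputs each
hidden_b = [0.0, 0.1, -0.1]
output_w = [[0.7, -0.5, 0.2]]                      # 1 output node, 3 inputs
output_b = [0.05]

output = forward([1.0, 2.0], hidden_w, hidden_b, output_w, output_b)
print(output)  # a single value between 0 and 1
```

A deep network repeats the `layer` step many times, feeding the output of each hidden layer into the next.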

More about Large Language Models (LLMs) and Generative AI

LLMs are a class of generative models that can process and create text. Generative models use neural networks to generate new content, something previously thought to be the realm of humans in creative pursuits. They can create images and text very similar to the data used to train them. LLMs learn a probability distribution over the next token (a word or piece of a word) given the text so far, and use it to generate text one token at a time when prompted.

A couple of terms that one might hear in this space are transformers and diffusion models. A transformer is a neural network architecture used in most LLMs that lets the model weigh the surrounding context when producing each token, so that the content fits its context. A diffusion model is a special ML technique where the model learns to generate images from noise, by iteratively removing the noise. Diffusion models can use transformers, but often use other architectures.
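The idea of a probability distribution over next tokens can be illustrated with a toy model that simply counts which word follows which in a tiny made-up text. This counting approach (a bigram model) is a stand-in chosen for illustration: real LLMs use neural networks and look at far more context than one word, but the output has the same shape, a probability for each possible next token.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each token is followed by each other token."""
    tokens = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def next_token_distribution(counts, token):
    """Turn the raw counts for one token into probabilities."""
    following = counts.get(token, Counter())
    total = sum(following.values())
    return {t: c / total for t, c in following.items()}


# Made-up training text.
corpus = "the cat sat on the mat the cat ate the fish"
counts = train_bigram(corpus)

distribution = next_token_distribution(counts, "the")
print(distribution)  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

Generating text then amounts to picking a next token according to these probabilities, appending it, and repeating.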

Common uses of ML

Machine Learning can help with

- content recommendation

- image analysis and object detection

- fraud and spam detection

- medical imaging and diagnostics

- computer-assisted surgical instruments

and many other applications.

In particular, generative models (a subset of ML) can help with

- documentation

- code generation

- automated help such as chatbots

- customer support

- image and video creation

- music generation

and other creative pursuits.

Takeaways

This post touches the tip of the iceberg called AI (which uses ML in many situations). What one might take away from this post is:

- ML is a subset of AI

- ML is not computer programming in its classic sense (using explicit instructions to execute tasks)

- ML needs data, a good model, and usually more computing power than a normal program (especially when training the model)

- ML is only as good as the data used to train the model and the governance used (allowed error)

- AI and ML have inherent error, so human oversight is important, especially in high-risk or safety-critical situations.

AI is going to change our future. I hope this post helps you choose wisely when you need AI and ML in your solutions.
