A while ago I wrote on a topic that, technically, I’m not super qualified to write about. That topic was agglutination and it was a ton of fun for me, despite my lack of linguistics training, because I’m passionate about language. Today I’m going to fold that passion (about language) into something I’m educated in (computer science) and present a few (several) examples of Natural Language Processing (NLP) that you (the reader) might be familiar with.

NLP is a subset of Machine Learning (ML) that is focused primarily on human (as opposed to computer) language applications. Like other ML disciplines, NLP leans heavily on statistical models to draw insights from bodies of data. You feed these models a bunch of hand-curated data (a “training corpus”) to “train” them. Once a model is trained you can test new data against the model to gain insights.

It’s these insights that are the real draw of NLP, but the problems themselves might not be super well understood outside of NLP practitioners. That’s the purpose of this article: talking about some of the common problems that NLP is a great fit for by pointing out practical examples we’ve all encountered. My hope is that, through examples, we can better develop an intuition for the types of problems NLP is suited for.

With that, let’s start with something we’re all familiar with:

Question answering

The year was 1996. The World Wide Web was but a young thing. The Cowboys beat the Steelers in Super Bowl XXX, or so says Wikipedia. It was the year of Independence Day, Twister, Mission: Impossible, and Jerry Maguire.

Incidentally, remember that scene where Will Smith is dragging the alien through the desert in his parachute and he yells “and what the hell is that smell?!” and then kicks the alien a bunch? That line wasn’t in the script. The scene was filmed near the Great Salt Lake in Utah, and when the wind blows juuust right it will pick up and carry the scent of the millions of decomposing brine shrimp in the lake, and Will Smith was reacting to that smell. Wonderful.

Anyway, in 1996 Ask Jeeves was an internet search engine that you could type plain English into and get reasonable responses, which was a completely new capability. This was accomplished through semantic analysis, an NLP process – or, more specifically, question answering. You would type “where can i find flowers to send my mother on mother’s day” and it would pick up the general gist and show you links for flower shops and probably tell you when Mother’s Day is going to be.

It was a novel idea and it worked fairly well for less complicated queries, but you’ll notice they’re not around anymore so maybe people don’t want a natural language interface to their search engines. Moving on.

OCR

If you’ve ever been behind someone at the grocery store who pays with a check, you both understand my rage and have seen OCR in action.

Checks in the US have been immediately, electronically processed since 2004. It’s done via a tiny machine that sits near the point-of-sale equipment. You may have seen it: the cashier puts the check into the machine; the check whips in and out super quickly, and then the cashier puts the check into the till.

What’s happening? Well, the machine is scanning the front face of the check, but it’s also pulling the routing and account numbers from the bottom of the check. If you still have checks you should take a look. Those numbers are in a very specific font that machines can read pretty easily. Once the register has the account numbers (and it knows the total already) it will electronically apply the check. The paper copy is basically unused.

I will not admit publicly to owning one of those machines for research purposes.

OCR (optical character recognition) is a machine vision technique that analyzes pixels of an image of written/printed letters and numbers and comes up with a digital representation of that writing. It can also be used to scan entire books, journals, even (sometimes) handwritten notes.

Classification

“Does this thing belong in this bucket or that bucket?” is a very important question in NLP (and ML in general). These types of questions are classification problems and a quintessential example in NLP is spam detection.

For those who are reading a printout of this article after the collapse of the internet, “spam” is unwanted correspondence (usually with the intent of selling or scamming) that people started getting in abundance as the cost of delivery approached $0. Separating Actual Correspondence from the noise of randomly generated emails laden with viruses or coupons for medication became pretty arduous, so some very smart people turned to a solution based on statistics.

By feeding a statistical model plenty of examples of “spam” and “not spam” (and clearly labeling which is which) you can train the model to detect which bucket new correspondence belongs in, with some high level of certainty. You may have heard of Bayesian spam filtering – it was one of the first statistical approaches built to help detect and classify unwanted email.
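To make the “feed it labeled examples, then ask about new mail” idea concrete, here is a minimal sketch of a naive Bayes classifier in plain Python. Everything in it is made up for illustration: the class name `NaiveBayesSpamFilter`, the “ham” label for not-spam, the whitespace tokenizer, and the four training messages. Real filters train on far more data and do much more careful tokenizing.

```python
import math
from collections import Counter


def tokenize(text):
    # Deliberately naive: lowercase and split on whitespace.
    return text.lower().split()


class NaiveBayesSpamFilter:
    """Toy multinomial naive Bayes with add-one (Laplace) smoothing."""

    LABELS = ("spam", "ham")

    def __init__(self):
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = {label: 0 for label in self.LABELS}

    def train(self, text, label):
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokenize(text))

    def _log_score(self, tokens, label):
        vocabulary = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_words = sum(self.word_counts[label].values())
        total_docs = sum(self.doc_counts.values())
        # log P(label): how common this bucket was in the training corpus.
        score = math.log(self.doc_counts[label] / total_docs)
        # Add log P(word | label) for each word; the +1 keeps unseen words
        # from zeroing out the whole product.
        for token in tokens:
            count = self.word_counts[label][token]
            score += math.log((count + 1) / (total_words + len(vocabulary)))
        return score

    def classify(self, text):
        tokens = tokenize(text)
        return max(self.LABELS, key=lambda label: self._log_score(tokens, label))


# Hypothetical, hand-labeled "training corpus".
spam_filter = NaiveBayesSpamFilter()
spam_filter.train("cheap pills buy now", "spam")
spam_filter.train("win money now click here", "spam")
spam_filter.train("lunch meeting at noon", "ham")
spam_filter.train("see you at the meeting tomorrow", "ham")

print(spam_filter.classify("buy cheap pills"))
print(spam_filter.classify("meeting at noon"))
```

The sketch assumes at least one example of each label has been trained before `classify` is called; with an empty bucket the prior would be the log of zero.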

There are many other questions that can be answered by NLP classification techniques:

- Did a specific person author this specific text?

- How was the person feeling when they wrote this?

- What gender was the author of this text?

- What genre does this text belong to?

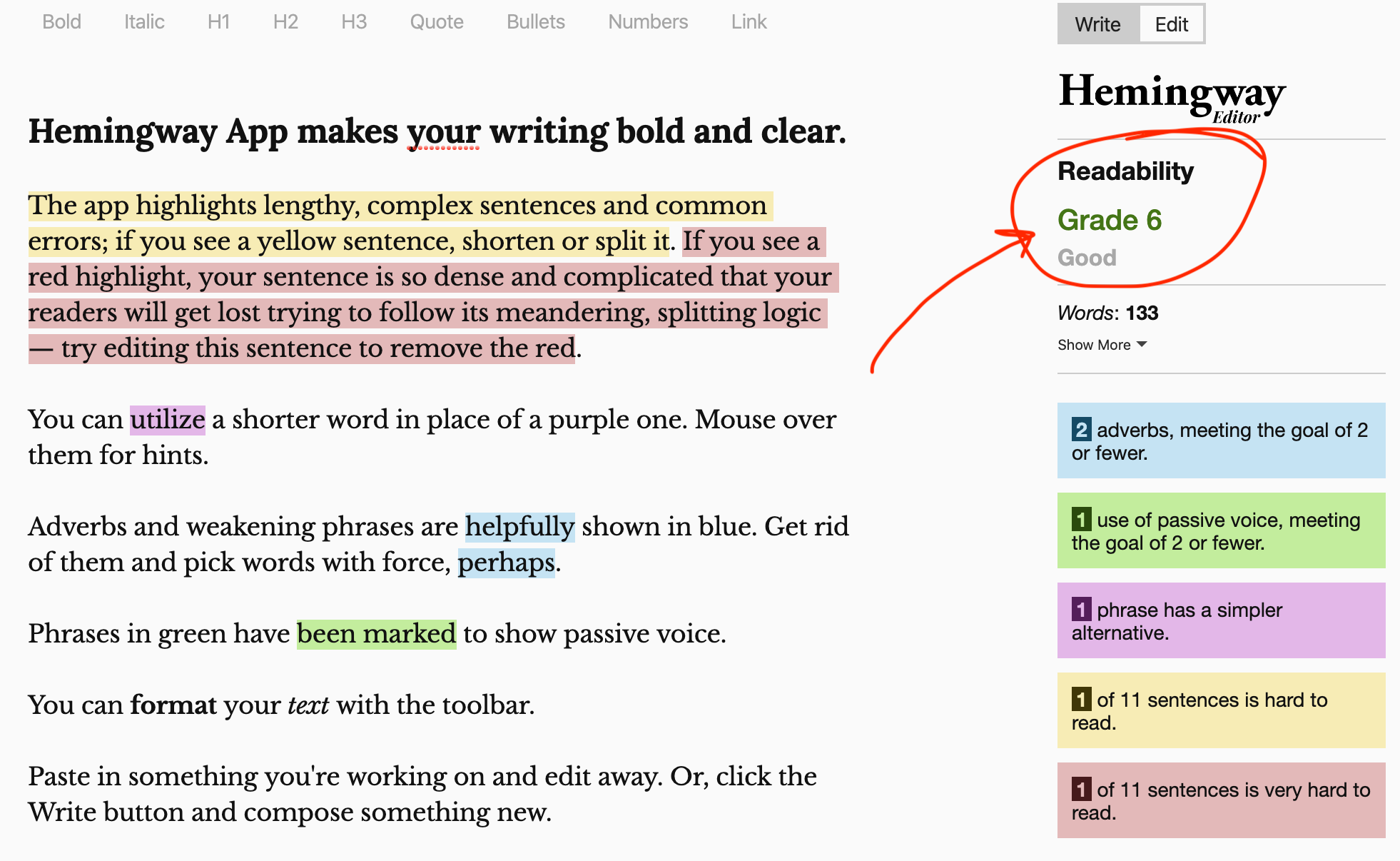

And my favorite classification application: readability analysis! If you’ve never seen the Hemmingway App, peruse this picture:

This screengrab demonstrates a few cool applications of NLP classifiers (readability score, “hard to read” sentences) and other syntactic analysis concepts like part-of-speech tagging (“2 adverbs”), but it also shows another feature of NLP solutions: “your” is tagged as misspelled. Remember, you’re dealing with statistical models and you will have false positives and false negatives, so make sure there’s a tolerance for error in your system before you start applying NLP tools.
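If you’re curious what a readability score looks like under the hood, here is a small sketch of one well-known formula, the Automated Readability Index (ARI), which estimates a US grade level from characters per word and words per sentence. I’m not claiming this is the formula the app in the screenshot uses; that is an assumption on my part, and the function name and the regex-based word and sentence splitting are simplifications made up for this example.

```python
import re


def automated_readability_index(text):
    # Naive sentence split: any run of ., ! or ? ends a sentence.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Naive word split: runs of letters, digits, and straight apostrophes.
    words = re.findall(r"[A-Za-z0-9']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    # Count letters and digits only, not apostrophes.
    characters = sum(len(word.replace("'", "")) for word in words)
    return (
        4.71 * (characters / len(words))
        + 0.5 * (len(words) / len(sentences))
        - 21.43
    )


simple_text = "The cat sat. The dog ran."
dense_text = "Statistical classifiers occasionally misidentify unambiguous correspondence."

print(automated_readability_index(simple_text))
print(automated_readability_index(dense_text))
```

Short words in short sentences score low, long words in long sentences score high, and very simple text can even come out negative. The score is a rough estimate, so the same tolerance-for-error advice applies.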

Human interaction

Designed by researchers who want to punish people with young children, pharmacy robot menus are intelligent phone call answering systems that listen to your speech and decipher what service you want to access. “I want to refill a prescription” you might say, over your 8-year-old yelling about his day at school. “I did not understand you,” the robot would respond. Then you mash zero on the number pad until you can speak to an actual human.

There are many applications of NLP here, including speech recognition, text-to-speech, and yet another categorization (there are finite actions a caller wants to execute; which is the most likely given a particular word or phrase?).
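The “finite actions” part can be sketched in a few lines once the speech has already been turned into text. This is a keyword-overlap toy, not a trained statistical model, and the intent names, keyword sets, and `guess_intent` function are all hypothetical. A real phone system would most likely score intents with a classifier trained on labeled calls.

```python
import re

# Hypothetical menu of actions and the words that hint at each one.
INTENT_KEYWORDS = {
    "refill_prescription": {"refill", "prescription", "renew"},
    "store_hours": {"hours", "open", "close"},
    "speak_to_pharmacist": {"pharmacist", "person", "human", "representative"},
}


def guess_intent(transcript, min_matches=1):
    """Return the intent whose keywords overlap the transcript most, or None."""
    words = set(re.findall(r"[a-z]+", transcript.lower()))
    scores = {
        intent: len(words & keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best_intent = max(scores, key=scores.get)
    # Below the threshold, give up: the "I did not understand you" case.
    if scores[best_intent] < min_matches:
        return None
    return best_intent


print(guess_intent("I want to refill a prescription"))
print(guess_intent("what time do you close"))
print(guess_intent("my kid is yelling about school"))
```

Returning `None` when nothing matches is the design choice that matters here: a system that always picks its best guess will confidently route you to the wrong place.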

Most people are familiar with speech recognition, as digital assistants such as Siri and Google Assistant can compose texts, emails, etc. with reasonable accuracy. And, at least in the context of phone menu systems, there has been automated speech generation for decades: “Your prescription will be ready, by, FIVE…. THIRTY… SEVEN… AM. Have a great day!”

The newer menu systems take advantage of some really cool developments in synthesis techniques, mostly built on concatenative synthesis. Instead of recording someone saying “Your prescription will be ready by” and then clips of them saying every number, the speech is synthesized from a pool of smaller units of sound, such as diphones. There are also advances being made in the prosodic (melodic) aspect of generated speech, which makes the phone systems sound more “human”.

And so

We’ve covered a lot of ground but it only scratches the surface of NLP’s abilities when it comes to language analysis. The list of examples I’ve curated is on the more practical end of the NLP spectrum. There are many, many examples of NLP usage for the more esoteric, language-specific applications, such as “given a word like ‘baking’, what is the root?” (“bake”) or “given some text that covers multiple topics, where does one topic end and the next begin?”

The latter questions are endlessly fascinating to people with an interest in language, but it’s hard to make them sound exciting to those whose recollections of middle-school sentence diagrams are accompanied by a shudder.

In closing, could your application benefit from:

- the ability to parse a natural sentence in order to react to it automatically?

- scanning and reacting to written text?

- categorizing a chunk of text into specific buckets, such as: (spam/not spam), (the writer was happy/the writer was not happy), (6th/7th/8th grade reading level)?

- the ability to speak to a person, with a dynamic script?

- the ability to record and react to human speech?

If so, it may be time to repress those sentence-diagram shudders and start leaning into NLP!
